Why is there so much fake stuff on the Internet? Perhaps it has something to do with a rare civilizational transformation that is taking place right now, a transformation that is challenging our relationship with information in ways that alter the very perception of existence. No longer content to live within the confines of the physical world, the global population moves seamlessly between alternate realities made possible by radical innovations in technology. What began with the campaign to relocate as many aspects of our lives as possible into the smartphone has accelerated during the COVID-19 pandemic, with both work and play for many becoming virtualized. The Internet presently exists as an extension of the world’s imagination, its data centers filled to the brim with competing fictions. This has been a boon to creativity, and may be jumpstarting a cultural renaissance like none other. But myriad dangers lurk here as well.

As the popular press reminds us on a daily basis, many bad things are hatched in the darker corners of the Internet. And those bad things are frequently underpinned by the pervasive barrage of falsehoods on social media. This has been singled out as a pivotal problem of our time—usually in alarmist terms. For instance, a recent report published by the Pew Research Center, a nonpartisan American think tank that studies social concerns, likened the Internet to a weapon of mass destruction merely because it is a participatory space. According to the report, “The public can grasp the destructive power of nuclear weapons in a way they will never understand the utterly corrosive power of the internet to civilized society, when there is no reliable mechanism for sorting out what people can believe to be true or false” (Anderson and Rainie 2017).

Blame for this tends to be assigned to specific social technologies and those responsible for creating them. If only we could trust-bust, reform, and regulate our way out of the post-truth stalemate, the global political crisis unfolding around us could be resolved (Zuckerman 2021). Or so the current thinking goes, reinforced by a growing cadre of activists, academics, and policy makers—all echoing a now-familiar narrative constructed from news-media coverage of extraordinary events tied in some way to the Internet. But this line of inquiry betrays a certain naïveté about the online experience. Do all falsehoods necessarily mislead us? Are those who produce false content always malicious? What would happen if media that facilitate the widespread dissemination of fictions were strictly regulated or even banned? Who even has a good grasp of what those media are and how they work?

Thoughtful inquiry into the matter of fake content on the Internet has been rare. There simply hasn’t been much interest in the historical development of such content or the social role it has played since the dawn of global information networks in the late 1960s. This is surprising, because at a fundamental level, fake stuff is interesting to everyone and always has been (McLeod 2014). Long considered a locus of rationalism, today’s Internet is more often than not pushing against facts and reason as it serves as a conduit through which popular stories move. The Portuguese political scientist Bruno Maçães has argued in his recent book History Has Begun that imaginative mythmaking on the Internet is upending society in ways that were inconceivable a generation ago (Maçães 2020a). This, he tells us, is a phenomenon that we should not fear but instead embrace. In his words, “Technology has become the new holy writ, the inexhaustible source of the stories by which we order our lives.” Yet there can be no doubt that within these stories there is tension between creative act and malicious deception, especially when both employ the same set of software tools to achieve their ends.

What exactly is going on out there in the digital terra incognita? This is a complex question. But if we could answer it even partially, we would have a better understanding of the economic, political, and social landscape of contemporary life. Instead of bemoaning the present state of affairs, in this book we will track a very different critical course by following an undercurrent of inventiveness that continues to influence how fake content is created, disseminated, interpreted, and acted upon today. Maçães, one of the few optimistic voices on this matter, is generous with a vision for this new digital landscape but meager with the evidence for what it constitutes and how it came about. Perhaps we can help him out by filling in those crucial details.

With respect to society’s perspective on reality today, a brief review of both the distant and the near past is helpful to set the stage for the chapters that follow. While it is undoubtedly tempting to jump right into a discussion of state-generated disinformation, right-wing propaganda, left-wing delusions, and other political material, let us leave these for the moment (we’ll come back to them later). Instead, let us consider the ways in which the imagination has directed culture over the centuries. Reality has always been shaped by ideas that are projections of the imagination, an essential aspect of our humanity. The imagination’s move into information networks is the product of social trends related to this phenomenon. Because of this, social technologies have had an outsized impact in the past several decades—and they bear a strong resemblance to some of the oldest known communication mediums.

Why, exactly, should we take a closer look at historical instances of fakery? On the surface, the artifacts that have been left behind appear incidental to the potent social trends we’re interested in; they’re merely the output of some creative process driving those trends. But we don’t have direct access to that process. It is impossible to travel back in time to recover the exact social context in which something appeared, and we do not have enough knowledge of the brain to understand the cognitive mechanisms that were invoked in its creation. By looking at the historical evidence, however, we can observe specific patterns recurring over time in what has been handed down to us. Rather crucially, early fictions are directly connected to those social trends that would upend our contemporary life, and so they are important indicators of what was to come. Why have we always been seeking to transcend the confines of the physical world? Because as humans, we can imagine possibilities beyond it. And this takes us into the realm of myth.

Working in the mid-twentieth century, the French anthropologist Claude Lévi-Strauss considered the question of how significant myths have been to the organization of society. He noticed an uncanny similarity between the myths of different cultures, from the primitive to the modern (Lévi-Strauss 1969). From this basis, Lévi-Strauss argued that an unconscious production of mythologies is an essential aspect of human thought (and perhaps says something about the underlying organization of the human brain). When confronted with a contradiction, a myth provides a simplification by which the mind can resolve it, thus relieving burdensome anxiety in some cases and creating entirely new possibilities for society in others. While one could be duped by this process, the simplification is often a breakthrough in its own right, allowing one to think through complicated situations with newfound clarity. This is what we tend to describe as creativity.

Lévi-Strauss also made a crucial observation about the experience of myths in practice, specifically, that humanity exists in parallel timelines: the physical world (i.e., the historical timeline) and the myth cycle (i.e., a fictional timeline). Mythical thinking, he argued, was in no way inferior to scientific thinking, but served a different yet equally important role in society:

If our interpretation is correct, we are led toward a completely different view, namely, that the kind of logic which is used by mythical thought is as rigorous as that of modern science, and that the difference lies not in the quality of the intellectual process, but in the nature of the things to which it is applied. This is well in agreement with the situation known to prevail in the field of technology: what makes a steel axe superior to a stone one is not that the first one is better made than the second. They are equally well made, but steel is a different thing than stone. In the same way we may be able to show that the same logical processes are put to use in myth as in science, and that man has always been thinking equally well; the improvement lies, not in an alleged progress of man’s conscience, but in the discovery of new things to which it may apply its unchangeable abilities. (Lévi-Strauss 1955)

The phenomenon of mythical thinking extends far beyond a single person dreaming up a myth in isolation. It is a palpable manifestation of a collective unconscious, informed by shared information—all members of a community operate within the same frame of reference (Campbell 2008). A part of the relief one feels when drawing on a myth in their own life is knowing that others are doing the exact same thing, perhaps even sharing in the revision of the same contradictory experience. Similarly, a big idea that transforms society is propelled by a crowd that is simultaneously enthralled by it.

Here we can make a connection to the present by considering the relationship between historical myths and Internet myths as they spawn memes. A meme is a cultural artifact that is meant to be transmitted from person to person and that can evolve over time like a biological organism. With the remixing of information in service of this evolution, current circumstances can be integrated into an overarching story and understood within that context. We know the meme today as a bite-size form of storytelling on the Internet, often making use of images or videos, but the general concept describes a highly effective mechanism by which myths of various forms can change and spread over time using any available medium of communication (Dennett 2010).

Current writing on Internet culture dates the genesis of what is now commonly understood to be a meme to the early 2000s, which coincides with the emergence of social networks (Shifman 2013; Mina 2019). But the phenomenon is much older. The evolutionary biologist Richard Dawkins, who originally popularized the notion of the meme in the 1970s, went all the way back to antiquity for his examples of how this process works over long time spans. He astutely argued that the durable meme complex of the philosopher Socrates is still alive and well within our culture, over two millennia after it first appeared (Dawkins 2016). When we look at what came before images and videos on the Internet, we see that historically memes have always encapsulated falsehoods, mythmaking, and culture quite effectively. The only real difference from today is the use of mediums that have long fallen out of favor.

It is, of course, the oral tradition that appeared first. The Homeric poems wove a rich tapestry from ancient Greek mythology and religion and fomented a host of ancient memes. Importantly, there are significant elements of fantasy throughout the stories of The Iliad and The Odyssey, from the actions of the gods to the circumstances of the Trojan War itself. There is no definitive proof that the war actually occurred, yet many ancient and modern people believed it was a real event, in spite of the lack of evidence (Wood 1998). Further, orality entailed changes to the stories, by virtue of people not having perfect memories. Once an acknowledgment was made that the story would be slightly different from telling to telling, the bard had room for improvisation in the performance.1 A large portion of the classical canon is based on novel reworkings of Homer, and the bulk of Greek and Roman culture shares some relationship to The Iliad and The Odyssey, which everyone was familiar with. The denizens of the ancient world weren’t just enjoying the Homeric poems as entertainment; they were, in a sense, living them.

Given the primacy of Homer and the low rates of literacy in the ancient world, the study of myth has (not undeservedly) emphasized the oral tradition. However, this overshadows another early development that is more important for our understanding of Internet culture: the use of visual mediums. We are, after all, visual creatures, relying on our sight to navigate any environment we find ourselves in. Thus an exchange of drawings, paintings, and sculptures is arguably a more natural way for people to communicate—and an even better way to immerse oneself in a myth cycle. Art was fairly accessible in antiquity, meaning ordinary people would possess objects that were creative in nature, often depicting familiar characters and scenes from a well-known story. With this development, The Iliad, The Odyssey, and the general mythological milieu could be transferred as memes via specific visual mediums.

Take, for instance, ancient Greek pottery.2 Vases, bowls, cups, and other household items were frequently decorated in whimsical ways that remixed the classical canon. Pottery became an effective medium for ancient memes because it was in common circulation and could be exchanged far more easily than a long poem could be recited from memory. Mass production ensured that the entire population of the Greek world was supplied with the latest iterations of their favorite stories.3 This wasn’t true of the period’s equivalent of visual fine art, which was slower to introduce revisions to the canon and not as accessible to the ordinary person. Remarkably, then, even in this archaic medium we find strong parallels with content that exists to promote fictions on the Internet today. According to Alexandre Mitchell, a scholar of early forms of visual communication, the painted pot can “tell us something about rules of behaviour, about the differences between the public and the private sphere, about gender difference, ethnicity, politics, beauty and deformity, buying patterns, fashion, perceptions of religion and myth.” Notably, humble pieces of pottery conveyed “what people really thought and experienced” (Mitchell 2009).

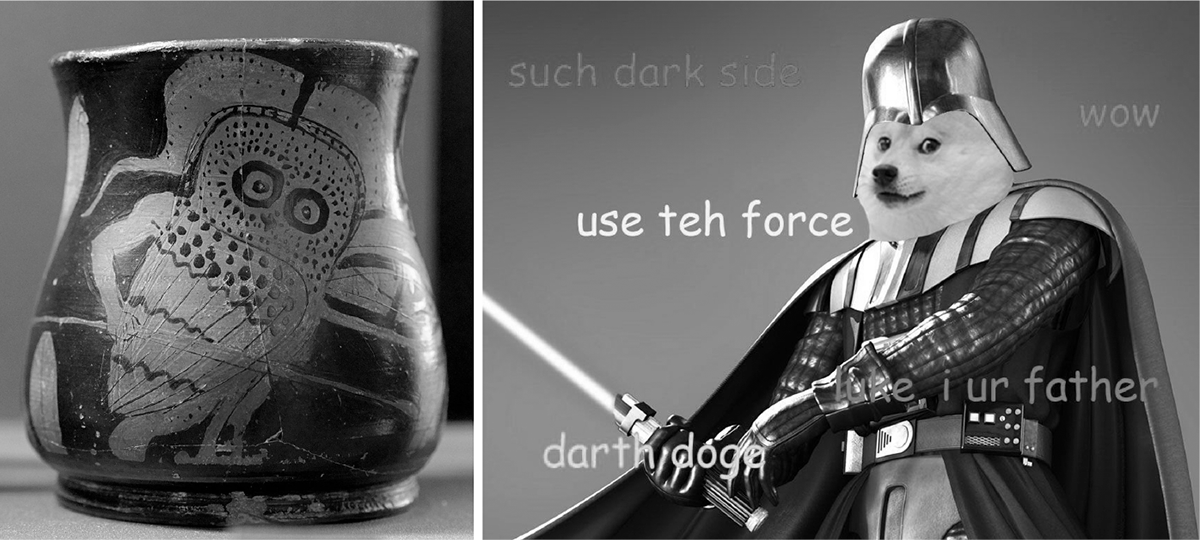

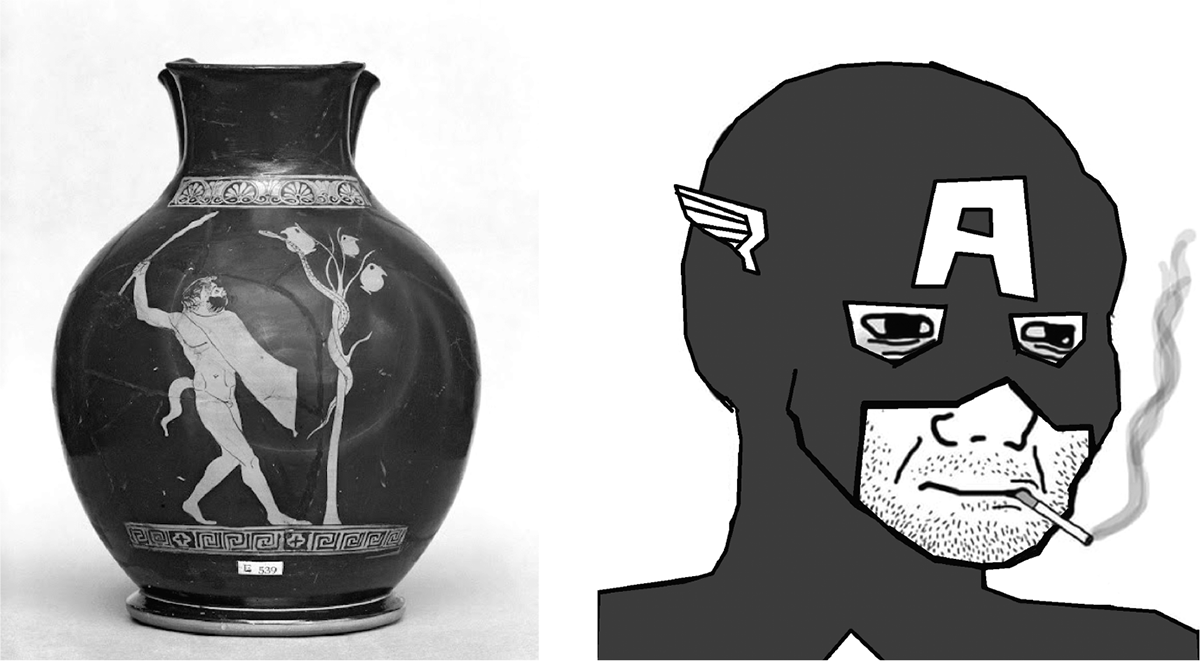

Anyone who is familiar with Internet memes knows that there are certain repeating motifs that are formed by drawing from a collection of standard elements. The same is true of the paintings found on Greek pottery. The use of stock characters was common, and this gave artists significant flexibility in treating a familiar scene. Silly anthropomorphized animals and fantastic creatures like satyrs served the same role as the Doge or Wojak in modern memes (figs. 1.2, 1.3)4—all could be used as comic stand-ins for gods, heroes, and other more serious figures. Many of the painted settings were drawn from the universe of the Homeric poems, similar to the way the settings used in the memes of today are drawn from a specific pop culture universe like that of Star Wars or Marvel Comics. Stock characters and settings were often intertwined with real events like athletic contests or religious festivals, allowing the artist to make commentary on current affairs.

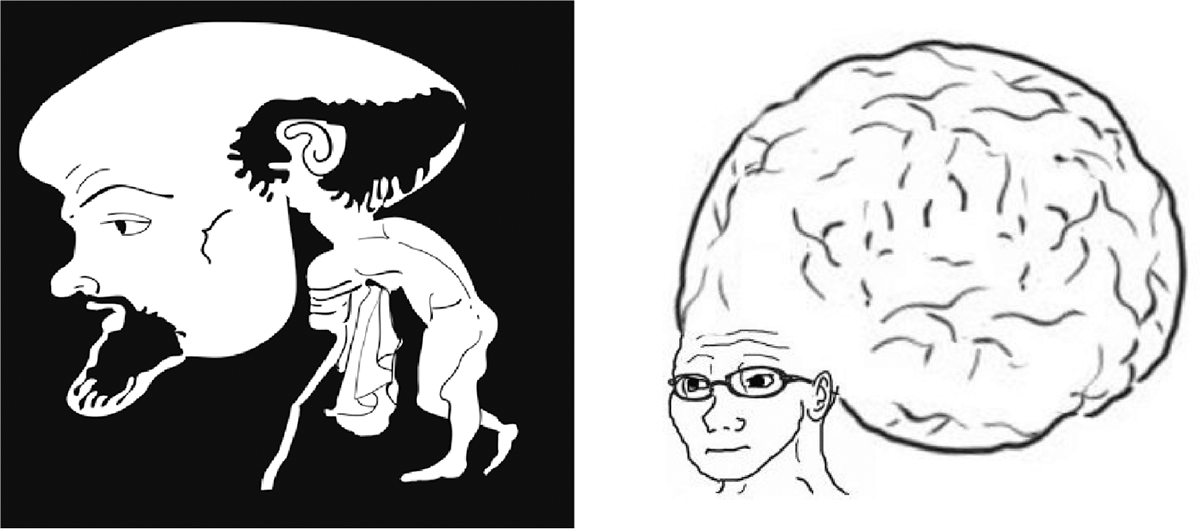

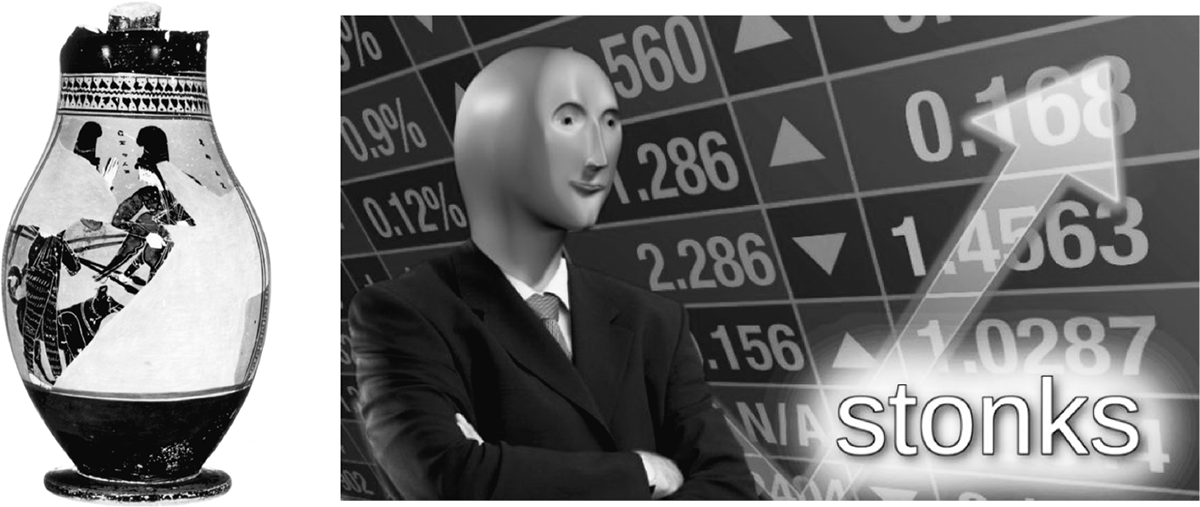

The way in which humor was deployed is significant to our understanding of the mood of a piece (Larkin 2017). Parody emerged as one of the earliest forms of social commentary, offering a humorous alternate reality within an alternate reality. One common form it took was absurdist comedy. For instance, the body parts of depicted figures could be exaggerated to emphasize some trait—something we find in popular Internet tropes like the “big brain,” which is used to make ironic statements about poor decision making (fig. 1.4). Nonsense writing, particularly confounding to archeologists, was also present in various scenes (Mayor et al. 2014). While many explanations for it exist (e.g., misspelling, meaningless decoration), it can function as an absurdist element, with the context providing clues as to how to interpret the joke. When we consider similar modern instances, like the “meme man” genre of memes,5 which highlights the absurdities associated with the culture of Wall Street, this makes perfect sense (fig. 1.5). Humor has not changed much over time, and it remains an effective form of criticism within a myth cycle.

While less accessible than visual mediums, ancient long-form writing should not be discounted, as it was a prototype for later writing in periods of higher literacy. The search for historical analogs to what we today call “fake news” has revived debate over the credibility of the Greek writer Herodotus and the tone he set for later writers chronicling significant events (Holland 2017). Peppered throughout his Histories, which is widely regarded as a foundational work of scholarship in the West, are tales that can’t possibly be true. For example, embedded in a mostly accurate report on the geography of Persia is the following account of the local insect life:

In this sandy desert are ants, not as big as dogs but bigger than foxes; the Persian king has some of these, which have been caught there. These ants live underground, digging out the sand in the same way as the ants in Greece, to which they are very similar in shape, and the sand which they carry from the holes is full of gold. (Godley 1920a)

In antiquity, how often were such stories recognized as fake? Were ancient commentators skeptical of their own culture’s histories? Writing hundreds of years after Herodotus, Pliny the Elder matter-of-factly reiterated the story of the Persian ants in his own Natural History (Bostock 1855). If fact-checking existed in the Mediterranean two thousand years ago, no evidence of it has been handed down to us.

The contemporary explanation for stories like the one about the ants is that in the past people were simply ignorant, living in intellectual darkness until the dawn of the Enlightenment in the late seventeenth century. Scientists are especially prone to promoting this explanation, which itself turns out to be a gross misrepresentation of the way in which information was exchanged and understood for centuries.6 The ancient reception of historians like Herodotus and Pliny was quite different from the reception of today’s scholarly histories. In the Greek world, there was a certain expectation that past events would be blended with elements of myth when recorded, in order to convey the relevant cultural context that wouldn’t be directly available in a simple recounting of the original event (Romm 1998). This provided a richer (and in many cases more entertaining) understanding of the event to the person experiencing the retelling. By the editorial standards of today’s most serious publishers, such reporting would obviously not pass muster. But it’s not unlike what happens in more popular forms of writing that are prevalent on the Internet, like tabloid journalism and conspiracy-theory-laden social-media posts.

The evolution of writing eventually led to the invention of the novel. Should we be concerned about that medium in our discussion of the development of fake things? On the one hand, the novel announces itself as a work of fiction—we aren’t meant to believe it. Yet on the other hand, the novel is necessarily a manifestation of fakery—the author had to invent the characters and the world in which it takes place. Crucially, the ancient novel also existed within the myth cycle, blending the very same elements that were found in other forms of creative composition like poems and histories. Early examples of the form like the Metamorphoses by Apuleius (Adlington and Gaselee 1922) and Daphnis and Chloe by Longus (Henderson 2009) also drew on the familiar settings and characters that were available in the catalog of Greco-Roman myth.

To the overly pedantic observer, everything we have discussed thus far should be rejected out of hand if it is being used for more than just entertainment. After all, a reasonable person should not be fooled into thinking that stories with clearly fictional elements like satyrs, heroes, giant ants, and the like are, in any regard, true. This raises the following question: is mythmaking distinct from lying and its more ignominious cousin, bullshitting? The answer is yes. According to the philosopher Harry Frankfurt, a lie is an attempt to deceive someone in order to achieve a specific objective in a tactical (i.e., limited) sense, while bullshit is largely vacuous speech that is a manifestation of the utter disregard for truth (Frankfurt 2009). A myth is both contentful and strategic (i.e., global) in its aims. It is the foundation of something large that builds connections between people, something we all strongly desire. Lying and bullshit are associated with the negative behavior of individuals, while myths are the collective expression of an entire community and are meant to be a reflection of the human condition. We cannot live without them.

If the inclination to tell meaningful stories explains why there is so much fake stuff on the Internet, then the situation we find ourselves in is ultimately good. Memes are not lies (they are too creative and typically aren’t trying to achieve an immediate, limited objective) nor are they bullshit (they are more information-rich). In general, the bulk of fictional writing on the Internet doesn’t conform to either type of problematic content. Instead it supports contemporary myth cycles. That is not to say that myths don’t influence behavior in any way. In some cases, that is the intent.

Kembrew McLeod, a professor of communication studies at the University of Iowa, has written extensively about the prank as an operationalized form of mythmaking. He defines it with a simple formula: “performance art + satire × media” (McLeod 2014). The performance-art component makes the prank a provocation, sometimes involving direct engagement with an audience in order to get them to do something. In addition, pranks lean heavily on humor, with a satirical component meant to stimulate critical thought. Unlike hoaxes, they are not often malicious in nature. Historical prankster Jonathan Swift is fondly remembered for pushing the boundaries of what his audiences could tolerate with shocking jokes that possessed some element of ambiguity. For instance, Swift’s satirical commentary on the eighteenth-century Irish economy, a pro-cannibalism pamphlet titled A Modest Proposal that suggested the poor of Ireland should sell their children to the rich as food, has triggered recurring moral panic over the course of several centuries. This, in turn, has made his joke funnier for those in the know, as each misunderstanding further emphasizes the critique Swift was trying to make about the neglect of the poor. The multiplicative media component of McLeod’s formula stands out as being particularly important to the success of a prank: technology acts as an accelerant. By the time Swift arrived, the printing press had enabled writers to have a broad and enduring impact.

Already at the dawn of civilization, then, all of the ideas were in place for the construction of the Internet myth engine. Yet it took a couple thousand years to get to where we are now. For much of that time, myth cycles were guarded by the clergy and aristocracy, with things proceeding as they had since antiquity. A sharp separation between lived fictions and observable phenomena began after the Thirty Years’ War in Europe, with the newly emergent bourgeoisie’s push to marginalize the supernatural, which it alleged was a tool used by clerical elites and the landed gentry to control everyone else (Piketty 2020). The motivation for doing so was just business: the belief that rational markets would increase wealth caused a radical reorganization of society and culture that was, in many ways, completely alien to the ordinary person. The separation would prove to be temporary, however—it’s easier to topple a monarch than a fundamental human need (Douthat 2021b). The myth cycles now exist digitally on the Internet, where they are increasingly supported by AI and are more influential than ever.

This convergence of myth and technology had been anticipated in science fiction at the dawn of the Internet era, most notably by the writer William Gibson in his novel Count Zero (Gibson 1986). In Gibson’s vision of the future, AIs take the form of Haitian voodoo gods in cyberspace in order to interact with people in a more appealing way. In a purely technological setting, it’s easy to dismiss the appearance of anything resembling mythical thought if one possesses a purely rational mind. In a sense reminiscent of Lévi-Strauss, one of Gibson’s characters discusses the difference between mythical and scientific thinking in the new convergence between religion and technology that is appearing, realizing that one shouldn’t be too quick to dismiss apparent impossibilities:

We may be using different words, but we’re talking tech. Maybe we call something Ougou Feray that you might call an icebreaker, you understand? But at the same time, with the same words, we are talking about other things, and that you don’t understand. (Murphy 2010)

In the novel, the voodoo spirit Ougou Feray is equated with a fictional computer hacking tool Gibson called an “icebreaker.” Both represent the idea of unfettered access: the ability to go where others cannot. Yet in the passage above there is recognition that modes of thought could be radically different from person to person, even when expressed in the same exact language, blurring the separation between the rational and mythical. The early days of the Internet brought Gibson’s vision of the future to fruition: digital myth cycles laden with ambiguity.

In the controversial 2016 BBC documentary HyperNormalisation, the filmmaker Adam Curtis suggested that contemporary life has become a never-ending stream of extraordinary events that governments are no longer capable of responding to (Curtis 2016). The reason for this, according to Curtis, is that politicians, business leaders, and technological utopians have conspired to replace the boggling complexities of the real world with a much simpler virtual world, facilitated by revolutionary advances in computing. By retreating to the virtual world, it’s possible to escape the everyday trials of terrorism, war, financial collapse, and other catastrophes that regularly plague the globe, without confronting them in any serious way. The film is both compelling and highly entertaining, introducing its audience to an array of eccentric figures, famous and obscure, who have had an outsized influence on the virtualization of life. Critics were mixed in their reactions to the film, in some cases not being able to make sense of the patchwork of concepts presented (Harrison 2016; Jenkins 2016). Given its coverage of everything from UFOs to computer hackers, one couldn’t quite blame them. But this reflects the tangled logic of the Internet we now inhabit.

What Curtis is really portraying in HyperNormalisation is the resurrection of the myth cycle during a specific period of the twentieth century. The film establishes that the process began during the 1970s and accelerated through the 1990s dot-com boom. What exactly happened in the 1970s to trigger this? Curtis believes that it was the activities of several key politicians of the era, including Henry Kissinger, the US secretary of state, and Hafez Al-Assad, president of Syria. Both injected chaos into the international system, with the consequence of a global pullback from the resulting unmanageable complexities. However, this wasn’t the only contributing factor. Contemporaneous trends in technology development were supported by the flight from complexity and related popular demand to return to the livable fictions of earlier centuries. The primary developments coming out of this were new communication mediums.

In the 1960s, sophisticated information networks began to connect to aspects of everyday life that previously had been untouched by computers. This started with the move to digital switching in the telephone network, which streamlined the routing of calls and made it easier to connect computers that could communicate over the phone lines. Demand for more bandwidth led to the development of better networking technologies throughout the 1970s. Ethernet, a high-speed local area network (LAN) technology, was invented at Xerox’s Palo Alto Research Center in 1973. The TCP/IP protocol suite, the language the Internet speaks to move information from point to point, was created for the Department of Defense’s ARPANET (the precursor to the Internet) in the early 1970s (Salus 1995). Bell Labs, the storied innovation hub of the Bell System (Gertner 2012), pioneered a host of Internet technologies in the same decade, including the UNIX operating system (Salus 1994), a server platform that would later make cloud computing possible. All three technologies would become indispensable to the operation of today’s Internet.

To savvy product designers at technology companies in the mid-twentieth century, the universal popularity of the phone system hinted at the potential for widespread adoption of computer networks by the general public. The new information networks would be highly interactive, moving more than just audio signals, which made the difference for ushering in drastic societal change. This was very different from print media, radio, and television, which were passively consumed. Information flowed both ways on the nascent Internet. Writers like Gibson noticed that this was happening and imagined how these networks would be used in the future. All signs pointed to a massive disruption in the prevailing social order, with elaborate fictions taking shape within a cyberspace that would be actively experienced.

Even allowing for these two catalysts of virtualization, it’s mystifying that a globalized society conditioned by the idea of rational markets was not able to keep humanity’s impulse toward myth at bay. Why did that happen? The technology investor Paul Graham has speculated that a refragmentation of society, made possible by networked computers exposing users to the entirety of the world’s ideas, drew people away from the homogenized national cultures that had developed after the Second World War as a mechanism of behavioral control (Graham 2016). National television might have brought a country together each evening, but the programming wasn’t really engaging to everybody. Similarly, a small number of oligopolistic corporations may have streamlined the structure of the economy, but they limited consumer choice. These systems were developed for efficiency during the war, but they were also a natural extension of the dismantling of the pre-Enlightenment world. From a policy perspective, such a setup stabilized society. Everyone was on common ground and directed away not just from countercultural ideas but also from any vestiges of the old religious and cultural traditions that pushed back against purely scientific explanation of observable phenomena. It turned out that not many people wanted to live that way. As soon as it was possible to fragment into myth-driven tribes again, people jumped at the chance. Early technology platforms supported, and in some cases even encouraged, this.

At the root of this refragmentation isn’t the cliché of a lost soul wanting to find their true self. There is a fundamental resistance to the organization of society based on rationality, where individuals are defined only by their role in the economy. The philosopher Byung-Chul Han has posited that the abandonment of myth cycles in favor of markets didn’t just limit our choices; it made us very ill (Han 2020). According to Han, depression, attention-deficit hyperactivity disorder, schizophrenia, borderline personality disorder, and other neurological disorders of the day aren’t diseases in the traditional sense. Instead, they are the symptoms of excessive striving in a chaotic reality many feel there is no easy escape from. Without a check on excessive initiative, whether through intimate social relationships, religious practice, or other cultural traditions that appear irrational to the market, it’s easy to fall prey to behavioral patterns that are harmful. This is exactly what the contemporary economy fosters. As Han has explained, “Where increasing productivity is concerned, no break exists between Should and Can; continuity prevails.” Han’s view is no doubt controversial, but it explains much. The move to a simpler, virtualized reality is the treatment many of us are seeking, without even realizing it.

One often forgets the sincere optimism of the early days of the Internet, which stemmed from the promise that Han’s dilemma and others could be resolved through technology. HyperNormalisation explored this idea by invoking the figure of John Perry Barlow, the longtime lyricist for the Grateful Dead and cyberlibertarian extraordinaire, who anticipated the construction of a viable alternative to conventional life, which he too believed had become dominated by work (and bland, passive forms of entertainment). Something had to be done to relieve the resultant alienation. Writing in the 1990s, Barlow proposed that “when we are all together in Cyberspace then we will see what the human spirit, and the basic desire to connect, can create there” (Barlow 2007). His vision was for users of the Internet not just to consume the vast amount of information available but to interact with it by contributing to the burgeoning artistic, political, and social movements assembling there. That optimistic spirit still lives on in some capacity today, in spite of the hand-wringing from political commentators over the alleged destructive nature of participatory media. The realization, however, was far different from what Barlow, a holdover from the 1960s, had hoped for.

To achieve Barlow’s vision would require restyling reality in a way that would reverse the forces of alienation present in the physical world. To this end, early Internet users created content by taking something real and changing it at will to satisfy the creator and the community they intended to share it with. This activity would become explosively popular in the smartphone era, but more often than not the products had little to no connection with hippie idealism. The human spirit is gloomy at times, spinning dense webs of frustration and anxiety as reflected in shared multimedia posts. It’s surprising, in retrospect, that Barlow did not foresee this, as it had been happening all along in illustration, photography, creative writing, and many other endeavors that would move to the Internet. The key difference was that originally, ordinary people couldn’t effectively remix content at scale. With the Internet and accessible software, now they could.

Paradoxically, the simplification of life into virtual reality led to new complexities. Curtis, in his film, points to computer hackers as transcendent figures who, with their ability to move freely through the Internet, were able to detect that new exploitative power structures were taking root there. Chronic problems facing the richest countries were being reduced by corporations and governments to storyboards, to which new plot elements could be added or removed at will. But such virtualized responses did nothing except change perceptions. Over time, hackers learned how to take control of communications mediums and modify the storyboards used in conjunction with them for their own purposes. The simplification strategy was brittle, and the activities of the hackers that exploited this brittleness proved to be a useful prototype for later, less benign, actors. Similarly, Graham’s refragmentation hypothesis was originally posed to explain the recent phenomenon of aggressive political polarization, which he attributed to an unanticipated consequence of Internet movements and their own storytelling activities. This is not to say that effective responses can’t be mustered from virtual reality. In fact, new ideas there that combine real and creative elements might be key to solving the world’s most pressing problems.

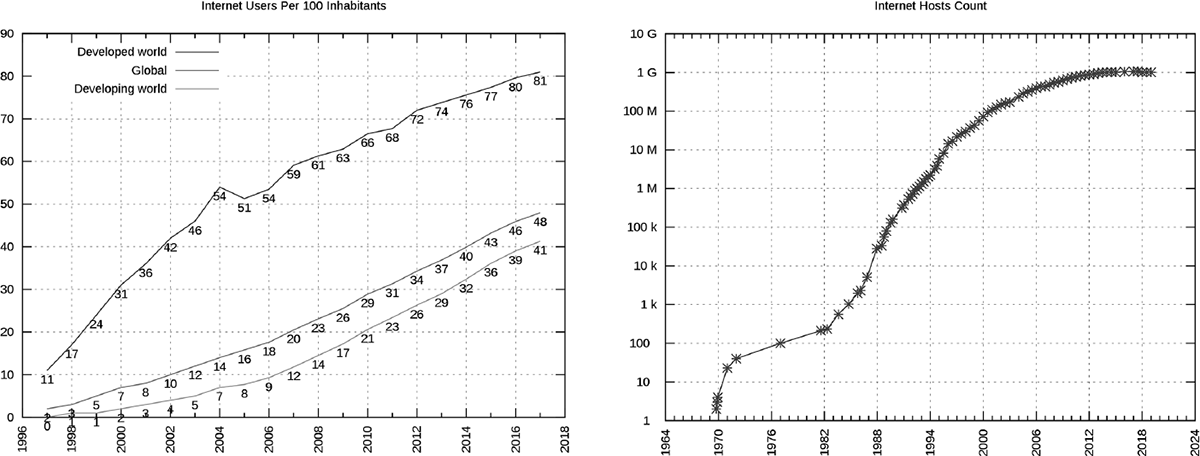

When Barlow first imagined the possibility of a collectively creative high-tech future, the Internet was, relatively speaking, rather small. It consisted mostly of university and government computers, along with a handful of corporate machines. People would temporarily connect to the network via slow dial-up telephone connections and didn’t spend much time using it. According to the International Telecommunication Union, a United Nations agency, a mere 2 percent of the world’s population was connected to the Internet in 1997 (International Telecommunications Union 2007). The sheer scale of the Internet in the present decade is a marvel of human achievement—nothing else in technology comes close to rivaling its capabilities and reach (fig. 1.6). This infrastructure is the cradle of the fake material we are interested in.

The statistics for today’s Internet reveal explosive growth in connected devices, computing power, and, most importantly, content. A recent report by the defense technology company Northrop Grumman noted that in 2016 a zettabyte of data, or one trillion gigabytes, was transferred on the Internet for the first time (Bonderud 2020). This reflected a dramatic increase in multimedia content such as the images and videos being posted to social media, as well as the proliferation of networked mobile devices with cameras. By 2022, the number of devices connected to the Internet was predicted to reach between 12 and 29 billion (Fadilpašić 2019; Maddox 2018). And many of those devices would be smartphones: in 2020, 3.5 billion smartphones were already in circulation (Newzoo 2020). The cloud provider Domo has estimated that 1.7 MB of data is now generated each second for every person on the planet, reflecting pervasive mobile connectivity (Domo 2017).

The enormity of the system of backend servers managing the data flowing to and from all of those phones cannot be neglected. Vast cloud-computing platforms maintained by a handful of large technology companies like Amazon and Google have concentrated the Internet’s data into centralized locations, making it easier to manage and exploit (de Leusse and Gahnberg 2019). The exact size of these cloud platforms has never been publicly disclosed, but to anyone using them to host a website or app, their resources appear limitless. According to the Wall Street Journal, in 2015 Amazon was adding more computing capacity every single day than it had in all of 2004 (McMillan 2015).

Boundless pools of user-generated data and corporate clouds have made social media a global sensation. Seven out of every ten Americans are now social-media users, and usage cuts across all demographics (Pew Research Center 2021). In China, the most popular social networks, like Weibo, attract hundreds of millions of users (Ren 2018). With everyone having more time on their hands during the pandemic, smartphone use is on the rise. The average American now spends 4.2 hours per day on their phone (Perez 2021), compared to 4.7 hours for the average Chinese (Liao 2019). Importantly, because social-media platforms are inherently interactive, every user with a smartphone is a content creator, contributing whatever they want to the universal pool of data on the Internet. Putting these numbers together, that’s a lot of capacity and time to work with.

When it comes to the images, videos, and everything else being obsessively shared by users of social media, nearly all of it has been altered in some way, from smartphone cameras silently changing our photos to make us look better to Internet trolls making insidious changes to historical events with Photoshop. This poses a dilemma: if the truthfulness of a great deal of the data found on the Internet is in question, what implications does that have for its subsequent use? Should a photo that is automatically altered by a camera be treated the same way as a photo that is intentionally altered by a skilled human artist? There are no simple answers to such questions. But that hasn’t stopped forensic specialists skilled in data analysis from trying to provide some—especially via algorithmic means.

How much of the Internet is fake, anyway? Like the size of the corporate clouds, this is currently unknown. However, the best estimates indicate that less than 60 percent of web traffic is human generated, the bulk of users on various social-media platforms are bots, and the veracity of most of the content people are consuming is in question (Read 2018). Thanks to the open nature of the Internet, any data found there—be it files or collected user behavior—will inevitably be employed elsewhere. Partly this reflects the big-data business plan: users provide data to a company in exchange for being able to use a platform at no cost. All of the text, photos, and videos are then used by the company to enhance its products and improve user experience. That much of the data is not original and has been altered in some way leads to a bizarre outcome: each enhancement pushes us further into a hallucinatory realm. Because the platforms are inherently social, the data is necessarily public, and can thus be used as the raw material for any endeavor by anyone. Enter AI.

Today’s AI systems are trained with enormous amounts of data collected from the Internet, and they’re demonstrating success in numerous application areas (Zhang et al. 2021). How powerful has AI become? There is much fear, uncertainty, and doubt around this question (Ord 2020). AI reached an inflection point in 2012 with the demonstration that deep learning, that is, very large artificial neural networks, could be exceptionally effective when the computational power of Graphics Processing Units (GPUs) was combined with public data from the Internet (Metz 2021). In essence, GPUs are compact and inexpensive supercomputers, largely marketed to gamers but useful in other capacities, like AI. A GPU sold in 2021 by NVIDIA, a computer hardware manufacturer, for instance, was about as powerful as a supercomputer from the first decade of the twenty-first century (Liu and Walton 2021). GPU hardware is particularly adept at processing large batches of text or image data in parallel, substantially cutting down the time needed to train neural networks. This led to a breakthrough in Stanford University’s ImageNet Large Scale Visual Recognition Challenge benchmark in computer vision (Russakovsky et al. 2015), where the task involved having the computer automatically label an image based on what was depicted within it. It also led to the mistaken popular notion that a viable model of general intelligence had been developed by computer scientists.

Deep learning would go on to dominate the fields of computer vision and natural language processing, but it didn’t replicate all of the competencies of human intelligence. Computer scientists were creating perceptual systems, not cognitive ones—that is to say, systems that replicated parts of human sensory processing like vision and audition, not cognitive faculties like reasoning or consciousness. To make the distinction is not to downplay the achievements of engineering here—what modern deep-learning systems can do is absolutely astounding. In particular, the class of systems known as generative models is most impressive. A generative model is an AI system that is able to synthesize new content based on what it knows about the world (a creative example is shown in fig. 1.1). The notorious deepfake algorithm (Paris and Donovan 2019), which reanimates recorded video of a person, is an example of a generative model, as is the GPT-3 language model from OpenAI (Brown 2020), which is able to write comprehensible passages of text. It is here that we truly see the interplay between the material posted to social media and the neural networks that learn from such data: human- and machine-generated artifacts are now indistinguishable (Shen et al. 2021). This is arguably the greatest achievement of AI so far, panic about deepfakes aside.

Contemporary trends in technology have assembled the global population, troves of multimedia data, overwhelming processing power, and state-of-the-art AI algorithms into an ecosystem that looks uncannily similar to the myth cycle described by Lévi-Strauss. If a realignment of society and myth has indeed taken place on the Internet, it is hardly surprising that madcap meme movements like QAnon (Zuckerman 2019), WallStreetBets (Banerji et al. 2021), and Dogecoin (Ostroff and McCabe 2021) have all become increasingly visible during the most anxiety-ridden period of the twenty-first century. Digital memes have replaced physical memes, meaning any facet of reality, including major news stories, can be reworked on the fly and repackaged for the Internet via human or machine intervention. Contrarian thinkers like Maçães and New York Times opinion columnist Ross Douthat have commented on the vast potential of this new instantiation of the myth cycle to break the world out of years of cultural, political, and economic stagnation by presenting alternatives that would ordinarily be unthinkable in an exclusively rational environment.

Douthat in particular has argued for a model of the Internet as a meme incubator. Using the example of Elon Musk’s electric car company Tesla, which requires vast amounts of capital to achieve its sci-fi-tinged goal of producing an entirely sustainable and autonomous automobile, Douthat has explained that a corporation in the twenty-first century can be a meme, engaging with the Internet in unconventional ways to raise enthusiasm—and subsequently more capital. This, he has said, is representative of how the meme incubator works:

And it isn’t just evidence that fantasy can still, as in days of yore, remake reality; it’s proof that under the right circumstances the internet makes it easier for dreams to change reality, by creating a space where a fantasy can live independent of the fundamentals for long enough that the real-world fundamentals bend and change. (Douthat 2021a)

Here again is where the past is diagnostic—this is not a phenomenon new to the Internet. It has been unfolding for many years but is only now reaching fruition. And to understand it brings a certain optimism that otherwise seems impossible in the face of so much negative press surrounding technology at the present moment.

So what is this book about? In a nutshell, we will take a deeper look at the technological trends and individual incidents that have shaped the development of labyrinthine alternate realities on the Internet. This inquiry will span from the invention of the camera to current developments in AI. Familiar arguments about the origin and operational purpose of fake content, which tend to filter everything through the lens of contemporary American politics, will be strictly avoided. Instead, the spotlight will be on the history of four influential communities that haven’t received much recognition for their contributions to the rekindling of the myth cycles but whose dreams have become reality with startling regularity: computer hackers, digital artists, media-forensics specialists, and AI researchers.

Our explorations will draw on methodologies from a diverse group of disciplines. In an anthropological mode, we will consider reality to be a domain defined by both thought and the world—the latter crossing both physical and virtual spaces (Lévi-Strauss 1981). Thus we will proceed by scrutinizing messages, not the integrity of the medium conveying them. An understanding of how various communications mediums work is still essential though, as one needs to gauge their effectiveness for the task of participatory storytelling. For this we will draw on mass communication theory, which by the mid-twentieth century had largely predicted what would occur on the Internet (McLuhan 1994). Computer science also lends a hand for an informed discussion on the design decisions and technical operation of relevant infrastructure and algorithms. As we will see, even the technical aspects have an exceedingly strong social component, which is often obfuscated by programming languages and engineering lingo (Coleman 2016). And we will stay far away from alarmism, instead seeking pragmatic ways to draw distinctions between instances of fakery that are significant engines of cultural creation and those that pose a threat due to their message (Vallor 2016). When possible, we interrogate the very people involved in a case of interest, instead of merely extrapolating based on whatever they have fabricated. Appropriately to our object of study, at times their words will both clarify and beguile.

Each chapter is presented as a case study of sorts, looking at the roots of a specific technological trend. Thus while the book is organized chronologically, it can still be read in any order without losing too much essential context. Each trend was chosen as a pertinent example of how myths become tied to new mediums of communication. We will learn about hackers who fooled the news media (chapter 2); the history of darkroom and digital photographic manipulation (chapter 3); how stories were told through digital textfiles (chapter 4); the symbiotic development of media forensics and digital photographic manipulation (chapter 5); the origins of shock content (chapter 6); AI systems that make predictions about the far-off future (chapter 7); and the creative spaces of the Internet that are restyling reality today (chapter 8). As we will see, the four communities of interest intersect in surprising ways, demonstrating the fluidity of computer science—an asset that allows the discipline to rapidly parlay serendipitous interactions into innovations.

1. This argument was made famous in the twentieth century by the classicist Milman Parry (Parry 1971). More recent scholarship has analyzed how this process itself has evolved over time to influence modern content (Frosio 2013).

2. While the discussion here is situated in the West, the phenomenon can be observed in Eastern pottery as well (Wells 1971).

3. Athenian vases were mass-produced in the hundreds of thousands in the sixth and fifth centuries BCE. Because of these enormous production runs, a trove of pottery has survived into the present (Mitchell 2009).

4. The Doge is a self-confident-looking Shiba Inu invoked to make lighthearted social commentary; see https://knowyourmeme.com/memes/doge. Wojak is a stock meme character that works in humorously tragic circumstances; see https://knowyourmeme.com/memes/wojak.

5. https://knowyourmeme.com/memes/meme-man.

6. Neil deGrasse Tyson is one of the most prominent scientists promoting this misrepresentation of history, under the pretense of his rationally grounded expertise: https://www.youtube.com/watch?v=xRx6f8lv6qc.